We're a field that loves to count things! As we've matured, those numbers have been a great benchmark for us, and they keep getting bigger all the time. Better sample prep, better separation technologies, and faster, more sensitive instrumentation now let us generate in hours the data that, not all that long ago, took days or weeks.

In papers that describe these new methodologies, we see one kind of protein count filter. I think we see something a little different when these technologies are actually applied. If you want to show off how cool your new method is, you're definitely going to count your proteins at 1 peptide per protein. In my past labs we recognized those methods as great advances, but I sure had better have at least 2 good peptides before I could justify ordering an antibody!

I don't mean to add to the controversy, by any means. I think using one peptide per protein can be perfectly valid. Heck, we have to trust our single-peptide hits when we're doing something like phosphoproteomics, 'cause there's just one of them! And if I'm sending my observations downstream for pathway analysis, I'm gonna keep every data point available. I just wanted to point out how much the data changes.

I downloaded a really nice dataset the other day.

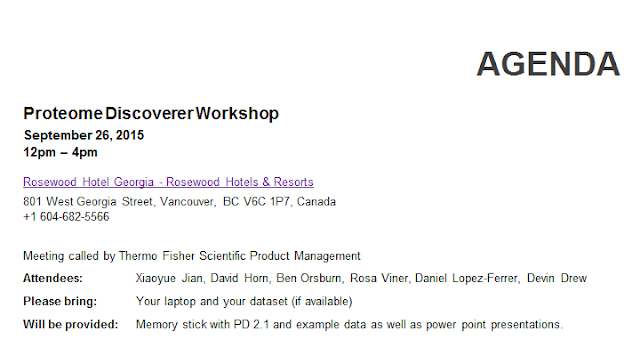

It's from this Max Planck paper and uses the rocket-fast Q Exactive HF (QE HF). I picked one of the best runs from the paper and ran it through my generic Proteome Discoverer 2.x workflow.

In 2 hours I get about 87,000 MS/MS spectra. If I set my peptide-to-protein filter so that a single peptide is enough to count a protein, I get 5,472 proteins from this run.

Now, if I apply a minimum filter of 2 peptides per protein...

Ouch! I lose over 1,200 proteins!
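If you want to see what that 1-vs-2-peptide switch does outside of any particular software, here's a minimal Python sketch. It assumes a simplified input of (peptide sequence, protein accession) pairs and counts distinct peptide sequences per protein. The function name `proteins_passing` and the toy accessions are hypothetical, this is not how Proteome Discoverer implements its filter, and it ignores shared peptides and protein grouping, which real workflows have to deal with.

```python
from collections import defaultdict


def proteins_passing(psms, min_peptides):
    """Return proteins supported by at least `min_peptides` distinct peptides.

    `psms` is an iterable of (peptide_sequence, protein_accession) pairs.
    This is a hypothetical, simplified input: real search output also carries
    scores, modifications, and protein-group information.
    """
    peptides_per_protein = defaultdict(set)
    for peptide, protein in psms:
        # Use a set so repeated spectra of the same peptide count only once.
        peptides_per_protein[protein].add(peptide)
    return {
        protein
        for protein, peptides in peptides_per_protein.items()
        if len(peptides) >= min_peptides
    }


# Toy example with made-up accessions, not data from the run above.
psms = [
    ("PEPTIDEK", "PROT_A"),
    ("ANOTHERPEPTIDER", "PROT_A"),
    ("PEPTIDEK", "PROT_A"),
    ("SHORTK", "PROT_B"),
]

one_hit = proteins_passing(psms, min_peptides=1)
two_hit = proteins_passing(psms, min_peptides=2)
print(sorted(one_hit - two_hit))  # ['PROT_B']
```

The proteins that show up in `one_hit - two_hit` are exactly the single-peptide identifications that a 2-peptide filter throws away.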

Are they any good?

Okay, this is an extreme outlier, but this protein is annotated in UniProt, so it's a real protein, and it only has one peptide! That single peptide gives 92% sequence coverage! I didn't know there were entries this short in there. With a 2 peptides/protein filter, we'd never, ever see this one.

The best metric here is probably protein-level FDR. (I did it the lazy target-decoy way, with a 1% FDR filter for high confidence and a 5% filter for medium confidence.)
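For anyone who hasn't looked under the hood of that lazy target-decoy approach, here is a rough Python sketch of a generic protein-level q-value calculation. It assumes each protein (target or decoy) has a single score where higher is better, estimates the FDR at each threshold as decoys / targets, and does not handle tied scores. The function name and toy scores are hypothetical, and Proteome Discoverer's own protein FDR validation may differ in its details.

```python
def target_decoy_qvalues(hits):
    """Estimate q-values for target proteins using a simple target-decoy approach.

    `hits` is a list of (score, is_decoy) tuples; a higher score is better.
    Returns (score, q_value) for target hits only, best score first.
    """
    ranked = sorted(hits, key=lambda hit: hit[0], reverse=True)
    targets = decoys = 0
    fdrs = []
    for score, is_decoy in ranked:
        if is_decoy:
            decoys += 1
        else:
            targets += 1
        # Estimated FDR if the score threshold were placed at this hit.
        fdrs.append((score, is_decoy, decoys / max(targets, 1)))

    # q-value: the lowest FDR at which this hit would still be accepted,
    # i.e. a running minimum taken from the bottom of the list upward.
    qvalues = []
    running_min = float("inf")
    for score, is_decoy, fdr in reversed(fdrs):
        running_min = min(running_min, fdr)
        if not is_decoy:
            qvalues.append((score, running_min))
    qvalues.reverse()
    return qvalues


# Toy scores for four target and two decoy proteins.
hits = [(100, False), (90, False), (80, False), (70, True), (60, False), (50, True)]
print(target_decoy_qvalues(hits))
# -> [(100, 0.0), (90, 0.0), (80, 0.0), (60, 0.25)]
```

Under this scheme, proteins with a q-value of 0.01 or less pass the 1% filter, and those up to 0.05 pass the 5% filter.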

It's interesting. Of these 1,200 single-hit proteins, about 150 are red (so... below 95% confidence). Another 150 or so are yellow, but the remaining ~900 are scored as high confidence at the protein level.
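To make the red/yellow/green split concrete, here is a small Python sketch that bins protein q-values using the same 1% and 5% cutoffs and tallies the bins. The bin names, function names, and toy q-values are made up for illustration. In Proteome Discoverer the colors come from its own validator, so treat this as an approximation of the idea rather than its implementation.

```python
from collections import Counter


def confidence_level(q_value, strict=0.01, relaxed=0.05):
    """Map a protein q-value onto high (green), medium (yellow), or low (red)."""
    if q_value <= strict:
        return "high"
    if q_value <= relaxed:
        return "medium"
    return "low"


def tally_confidence(q_values):
    """Count how many proteins fall into each confidence bin."""
    return Counter(confidence_level(q) for q in q_values)


# Toy q-values for a handful of hypothetical single-peptide proteins.
single_hit_qvalues = [0.002, 0.008, 0.03, 0.2, 0.004]
counts = tally_confidence(single_hit_qvalues)
print(counts["high"], counts["medium"], counts["low"])  # 3 1 1
```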

Okay, I kind of went off the rails a little. Really, what I wanted you to take away from this is how much a 2-peptide filter can affect your protein counts. The difference between identifying 5,400 proteins and 4,200? That's a big deal and worth keeping in mind. Will your data be more confident if you require this filter? Sure. Are you losing some good hits? Sure. But it's your experiment, and you should report the data at the level of confidence that you want!